↗ OpenReview ↗ NeurIPS Proc. ↗ Chat

TL;DR#

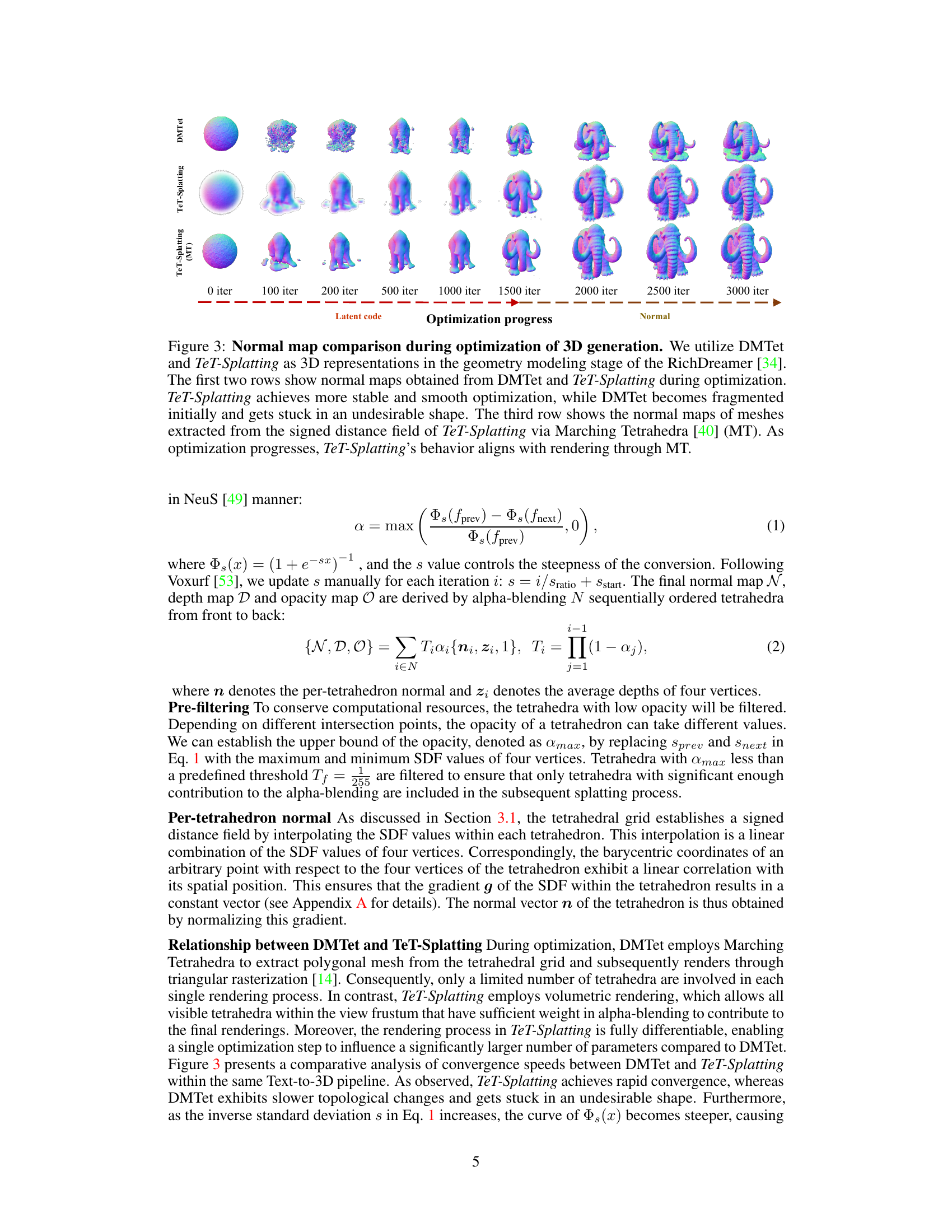

Current 3D generation methods using NeRF suffer from high computational costs and inaccurate mesh extraction, while methods like DMTet struggle with large topological changes and 3DGS lacks precise mesh extraction. These limitations hinder efficient optimization and real-time rendering of high-quality 3D models.

This work presents Tetrahedron Splatting (TeT-Splatting), a new 3D representation that overcomes these limitations. TeT-Splatting combines surface-based volumetric rendering within a structured tetrahedral grid, enabling easy convergence during optimization and precise mesh extraction. A tile-based differentiable tetrahedron rasterizer further enhances rendering efficiency, achieving a superior balance between speed, rendering quality, and mesh accuracy. The method also successfully integrates into existing 3D generation pipelines.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces a novel 3D representation, Tetrahedron Splatting (TeT-Splatting), which offers a superior tradeoff between convergence speed, rendering efficiency, and mesh quality compared to existing methods. This significantly advances 3D generation, particularly in conjunction with high-resolution diffusion models, and opens up new avenues for research in real-time rendering and high-fidelity 3D content creation. The tile-based differentiable rasterizer is also a significant contribution for real-time rendering.

Visual Insights#

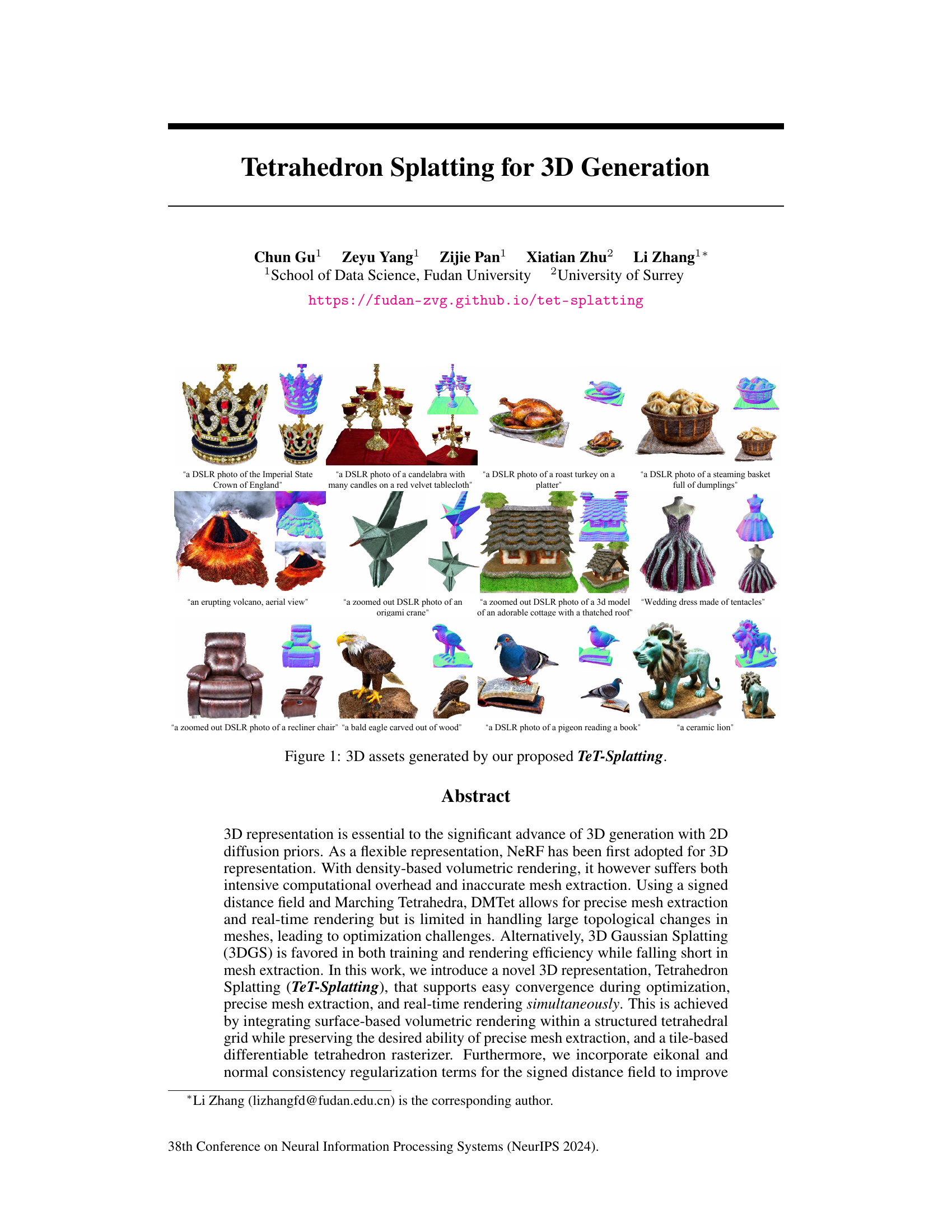

This figure showcases a diverse range of 3D models generated using the TeT-Splatting method. The images demonstrate the variety and quality of 3D assets that can be created using the technique, ranging from realistic objects (such as a crown, turkey, and chair) to more stylized or fantastical items (such as an origami crane and a wedding dress made of tentacles). The figure serves as a visual demonstration of the capabilities of TeT-Splatting in generating high-quality 3D models from various prompts.

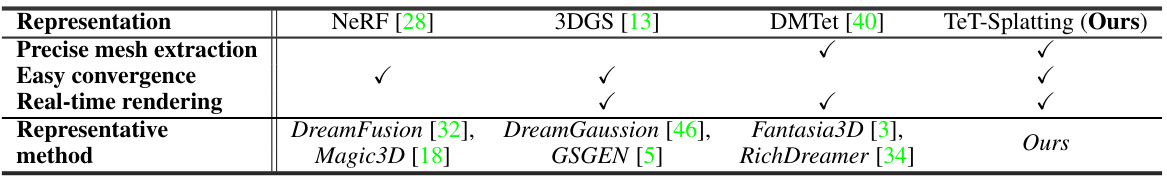

This table compares various 3D representation methods used in 3D generation, highlighting their capabilities in precise mesh extraction, ease of convergence during optimization, and real-time rendering performance. It also lists representative methods that utilize each representation type.

In-depth insights#

Tet-splatting: Intro#

The hypothetical section ‘Tet-splatting: Intro’ would likely introduce the core concept of Tetrahedron Splatting, a novel 3D representation method. It would likely highlight the method’s key advantages over existing techniques, such as improved mesh extraction, faster convergence during optimization, and efficient real-time rendering. The introduction would likely position Tet-splatting within the broader context of 3D generation using 2D diffusion models, emphasizing its unique approach to integrating surface-based volumetric rendering within a structured tetrahedral grid. A concise overview of the underlying mathematical principles and algorithmic design may also be presented, potentially focusing on the differentiable tetrahedron rasterizer and its role in achieving high rendering performance. Finally, a brief roadmap outlining the paper’s structure might conclude this introduction, setting the stage for a more detailed exploration of Tet-splatting’s implementation and experimental results in subsequent sections.

Diff. Tet Raster#

A differentiable tetrahedron rasterizer is a crucial component for efficient and accurate rendering in 3D graphics applications. It’s designed to handle the complexities of tetrahedral meshes, offering advantages over traditional rasterization methods in scenarios involving deformation and dynamic changes. This technique involves projecting tetrahedra onto the screen, calculating the resulting 2D splats or fragments and blending them to create the final image. The key challenge lies in ensuring differentiability, allowing for the effective use of gradient-based optimization techniques during the rendering process. The implementation often uses techniques like tile-based rasterization and pre-filtering to increase efficiency. A fast differentiable tetrahedron rasterizer is essential for real-time rendering and integration with techniques such as signed distance fields, enabling high-quality 3D content generation with precise mesh extraction. The performance tradeoffs between rasterization speed and accuracy are important considerations when designing and implementing these rasterizers, necessitating careful optimization of the algorithm.

3D Gen Pipeline#

A hypothetical 3D generation pipeline likely begins with prompt processing, converting natural language or other input modalities into a structured representation that guides the generation process. This is followed by a geometry generation stage, possibly using a method like Tetrahedron Splatting, to create the initial 3D shape. This stage often involves iterative optimization to refine the geometry based on the initial prompt and potentially incorporating additional constraints, such as eikonal and normal consistency. Then comes the texture generation stage, where the generated geometry is enhanced with surface details and material properties. Techniques such as neural radiance fields or physically-based rendering could be employed. Finally, a mesh extraction and refinement phase extracts a clean and high-quality polygonal mesh from the volumetric representation, suitable for various downstream applications. Post-processing steps, such as smoothing, remeshing, and texture optimization could further enhance the quality of the output. The entire pipeline is likely differentiable, enabling end-to-end training and efficient optimization.

Rich Diffusion#

Rich diffusion models represent a significant advancement in generative modeling, especially for 3D asset creation. They go beyond standard diffusion models by incorporating richer, more nuanced information beyond simple RGB values. This might include features like depth maps, normals, and material properties, enabling the generation of more photorealistic and physically plausible 3D objects. The use of rich diffusion priors offers improved control and fidelity compared to standard diffusion methods, resulting in significantly higher-quality outputs, even with more complex scenes and assets. However, increased computational cost and potential issues with training stability are key challenges associated with such enhanced models, requiring careful consideration of training strategies and computational resources. The ultimate success of rich diffusion models hinges on efficient training techniques and architectural innovations that address these challenges, unlocking their full potential to revolutionize 3D content generation.

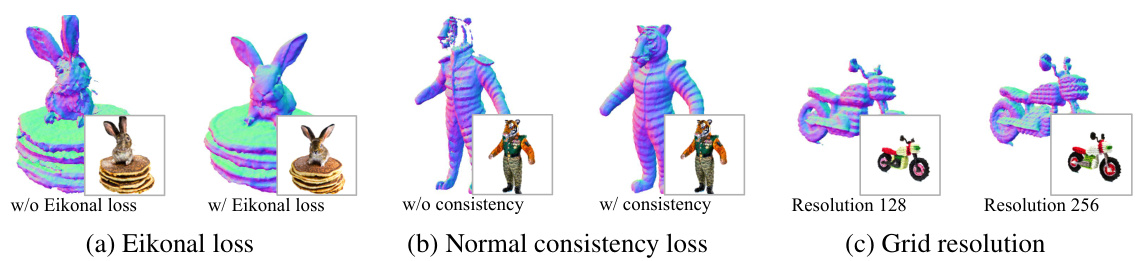

Ablation Study#

An ablation study systematically removes components of a model to assess their individual contributions. In this context, it would likely involve progressively disabling elements of the proposed Tetrahedron Splatting (TeT-Splatting) method, such as the eikonal loss, normal consistency loss, or the tile-based rasterizer, to understand their impact on the model’s performance. The results of such an experiment would reveal which components are crucial for achieving high-quality 3D generation, highlighting the strengths and weaknesses of the proposed approach. Furthermore, the ablation study might explore the effect of the grid resolution on mesh quality and computational speed, providing valuable insights into the trade-offs between accuracy, efficiency, and resource requirements. A thorough ablation study is critical for demonstrating the efficacy and novelty of the TeT-Splatting method compared to existing techniques, enhancing the overall credibility and understanding of the research.

More visual insights#

More on figures

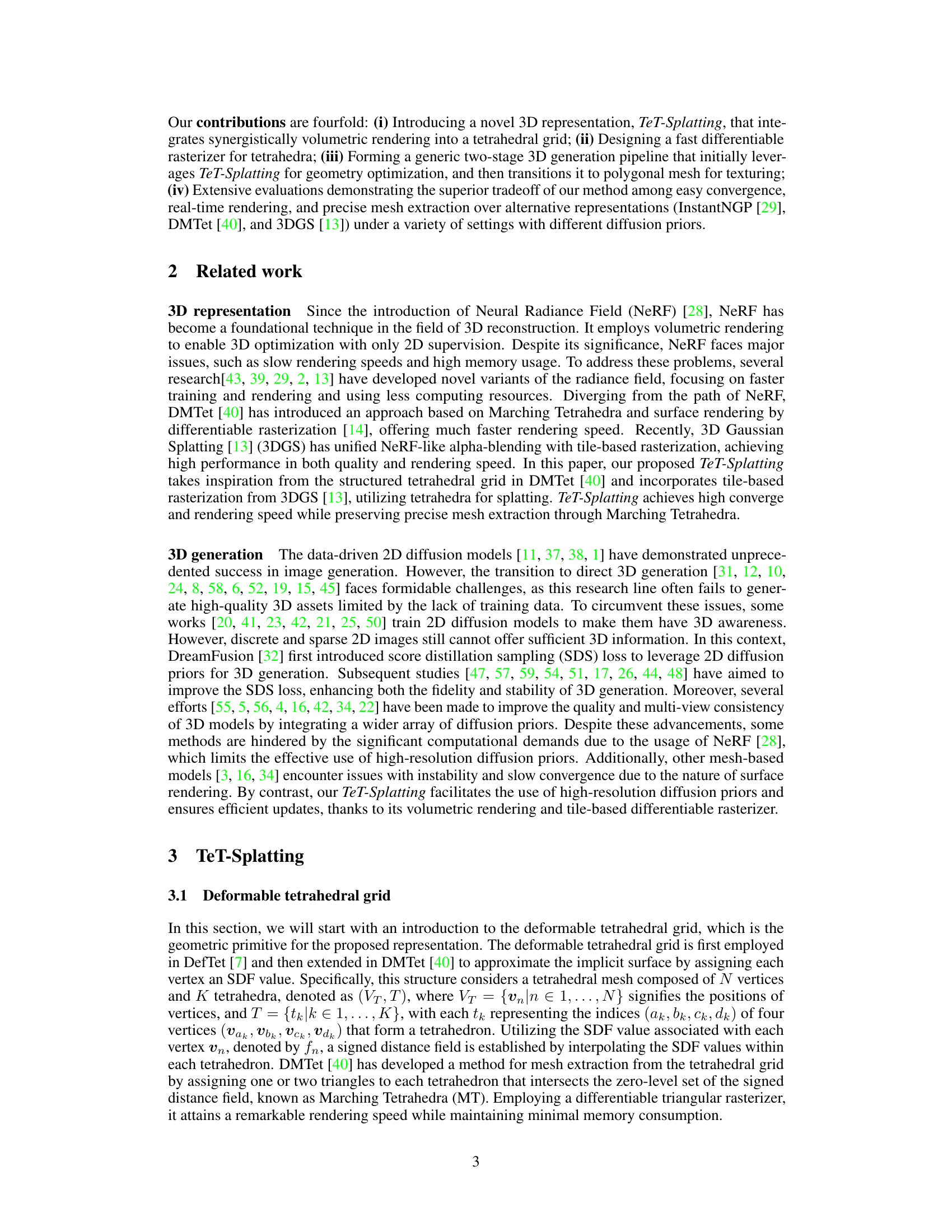

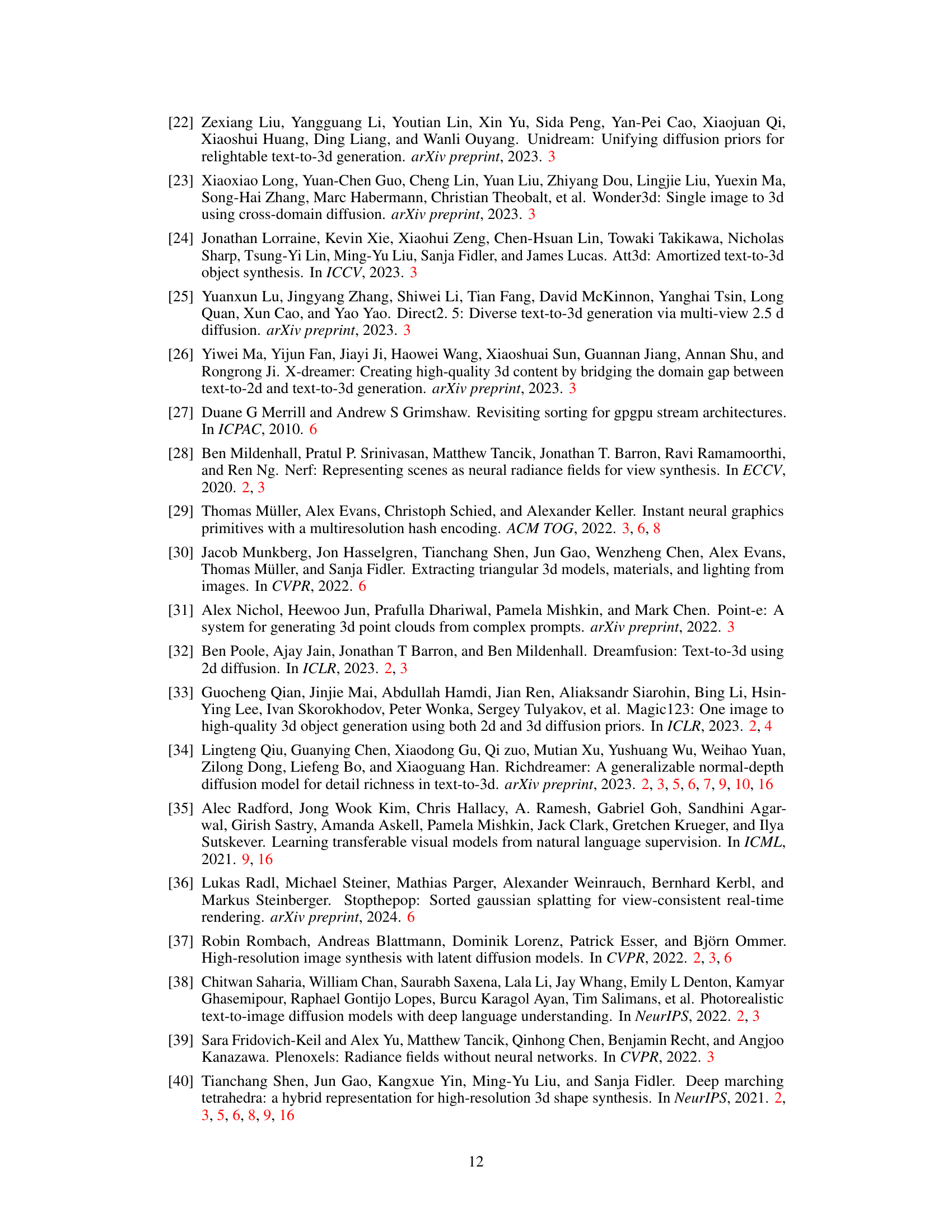

This figure illustrates the TeT-Splatting process. The left panel shows a step-by-step breakdown of the splatting process, starting with a pre-filtering step to remove transparent tetrahedra, and culminating in the generation of normal, depth, and opacity maps from 2D projections of the tetrahedra. The right panel shows how TeT-Splatting is integrated into a two-stage 3D generation pipeline, first optimizing geometry and then refining the texture using a polygonal mesh.

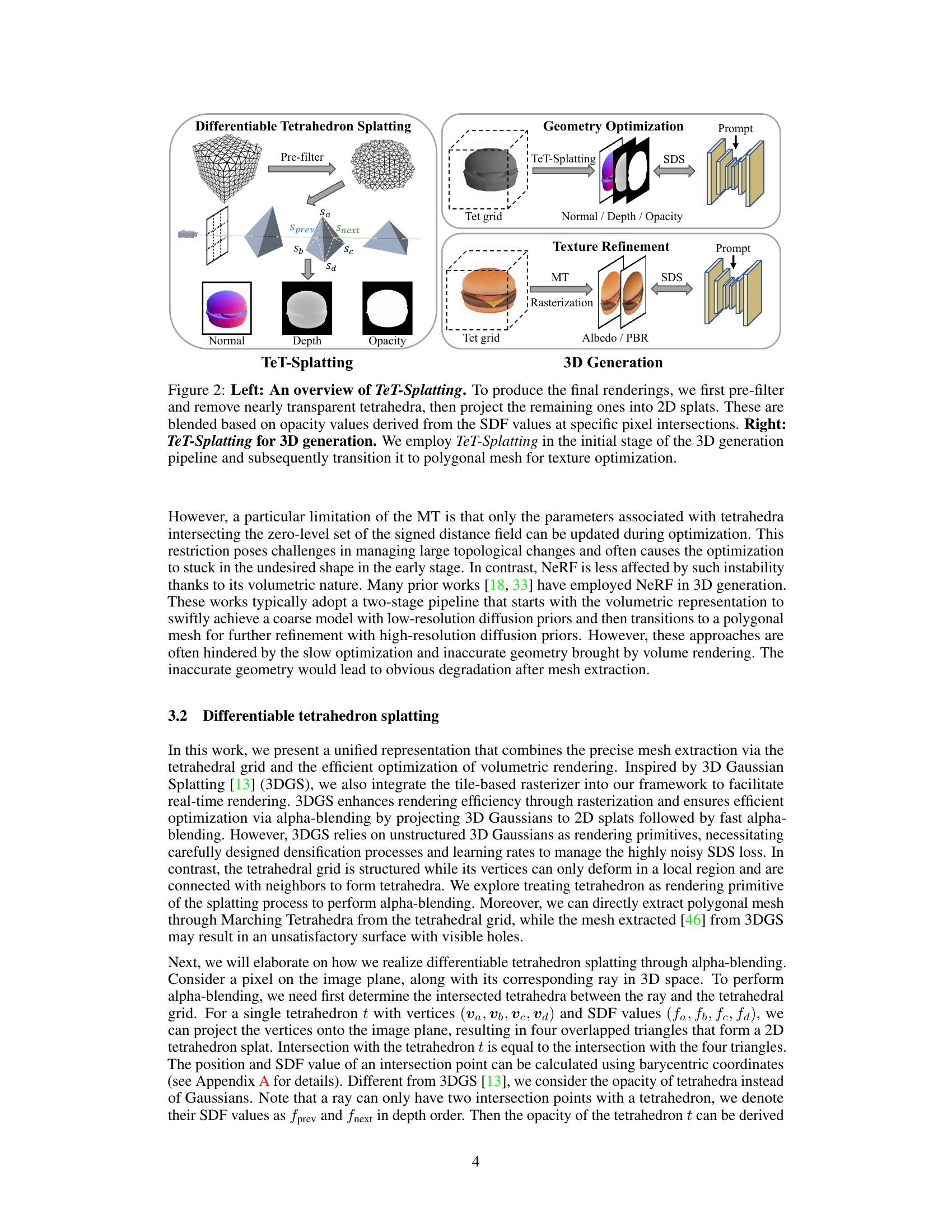

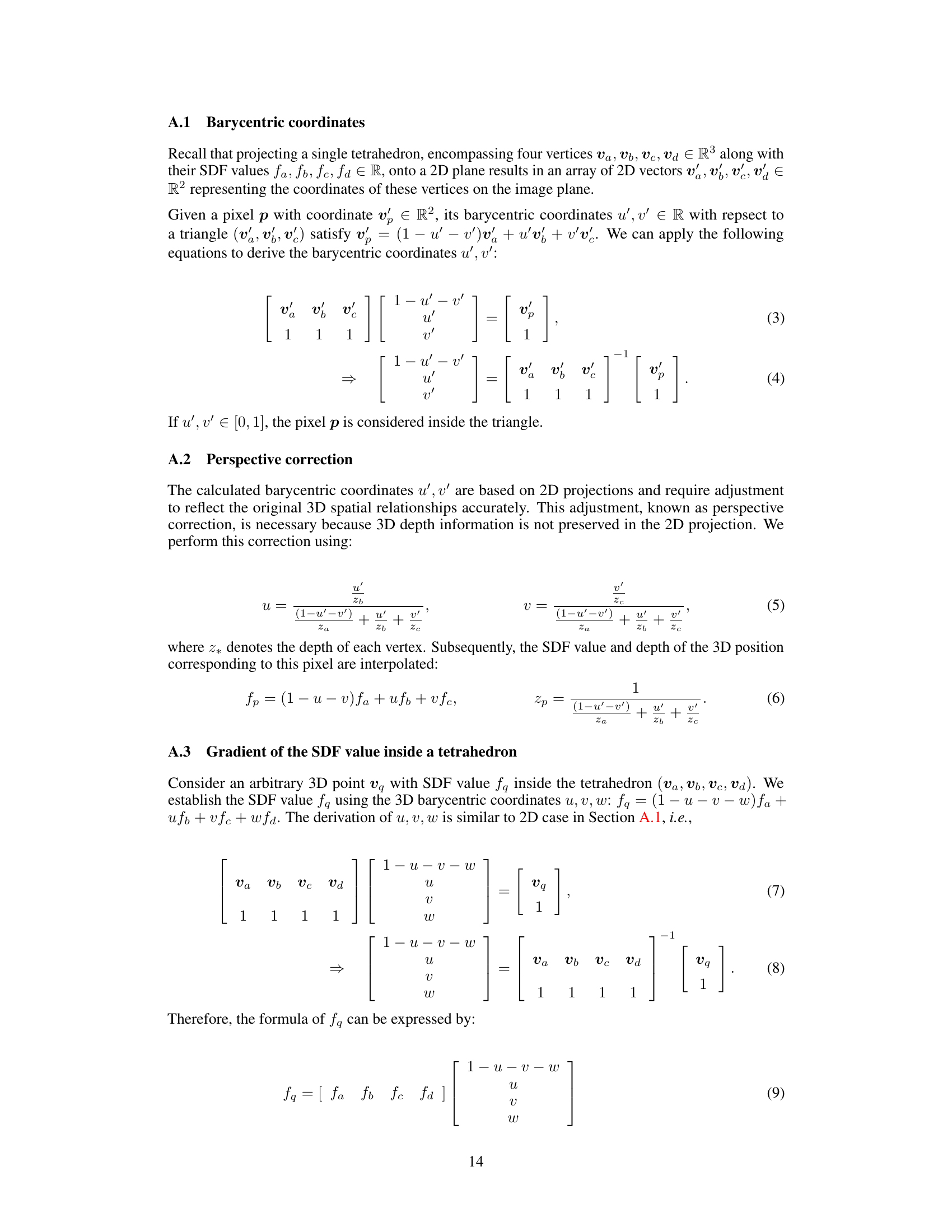

This figure compares the normal map evolution during optimization using DMTet and TeT-splatting methods for 3D generation. It shows that TeT-splatting provides more stable and smooth optimization, while DMTet results in fragmented and unstable results, especially in the early stages. The final row illustrates the alignment of TeT-splatting results with the Marching Tetrahedra (MT) method as optimization progresses.

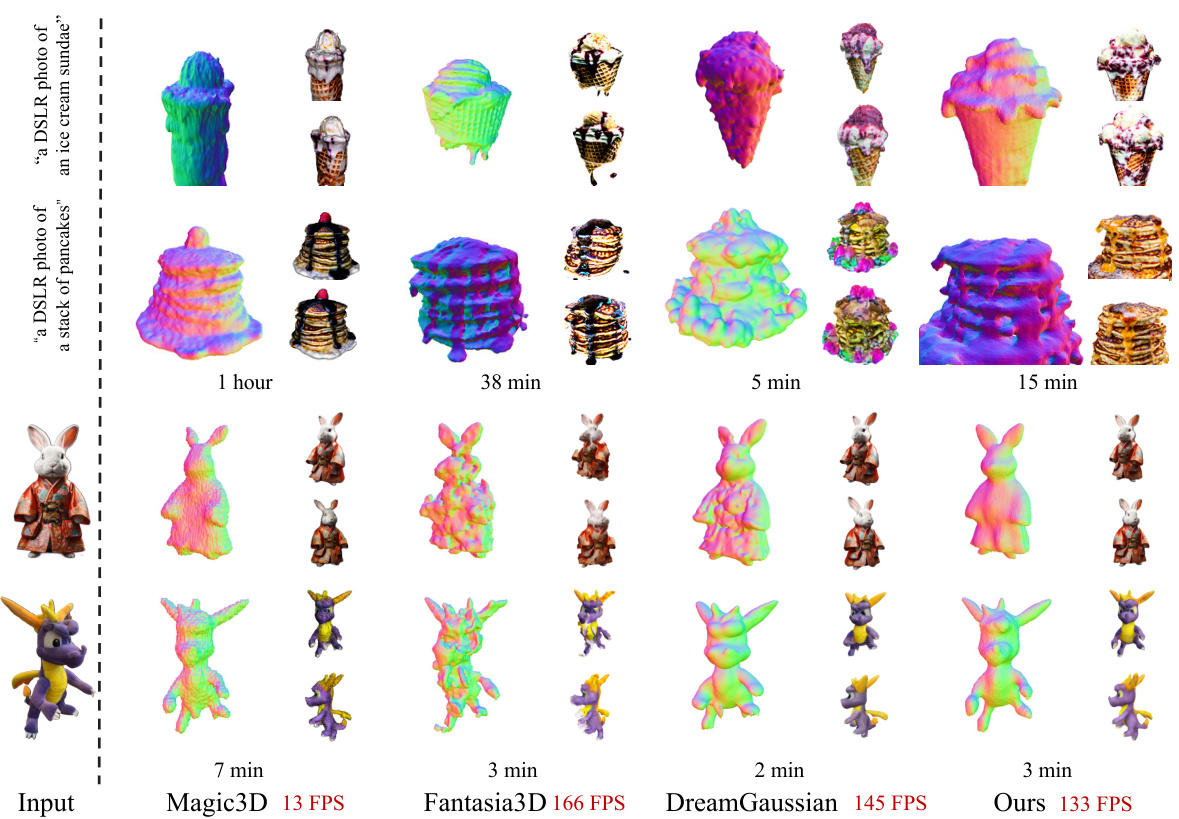

This figure compares the results of four different methods (Magic3D, Fantasia3D, DreamGaussian, and the authors’ method) for 3D generation using vanilla RGB-based diffusion priors. The comparison is shown for two tasks: text-to-3D and image-to-3D. For each method, the figure displays the generated 3D models for several example prompts, along with the training time and rendering speed (frames per second) for the first stage of the generation process. This allows for a visual and quantitative comparison of the different methods.

This figure compares the normal maps of 3D models generated by three different methods (Magic3D, DreamGaussian, and the proposed TeT-Splatting) before and after mesh extraction. It visually demonstrates the quality of the mesh extraction process for each method, highlighting the improved quality and smoothness achieved by TeT-Splatting compared to the other two methods. Note that the normal maps for DreamGaussian are derived from its depth maps, indicating a different approach to normal estimation.

This figure compares the results of four different methods (Prolific Dreamer, MVDream, RichDreamer, and the proposed TeT-Splatting) for 3D generation using rich diffusion priors. It shows the generated 3D models for three different text prompts: a porcelain dragon, a cup of pens and pencils, and a turtle wearing a top hat. The visual quality and the training time required for each method are compared.

This figure compares the normal maps generated by DMTet and TeT-Splatting during the initial stages of training. It visually demonstrates that TeT-Splatting achieves smoother and more stable optimization compared to DMTet, which exhibits fragmentation and gets stuck in undesirable shapes in the early iterations. The comparison is shown for five different object categories: Bear, Eagle, Piano, Tarantula, and Typewriter.

This figure shows the results of the texture refinement stage in the TeT-Splatting 3D generation pipeline. The second stage takes the geometry optimized in the first stage and generates detailed texture maps. The image displays three different maps for a generated 3D asset: the normal map (showing surface orientation), the albedo map (showing base color), and the PBR (Physically Based Rendering) map (incorporating surface properties like roughness and metallicness). This visualization demonstrates the quality and realism achieved in the textured 3D model.

This figure shows the results of applying the TeT-Splatting method to generate 3D assets. Specifically, it visualizes the normal map, albedo map, and physically based rendering (PBR) map for a set of generated 3D objects. The normal map illustrates surface normals, indicating the direction of surface orientation at each point. The albedo map represents the base color of the objects, while the PBR map incorporates additional material properties, resulting in more realistic rendering. This figure demonstrates the TeT-Splatting method’s ability to generate high-quality 3D assets, providing details on surface properties and visual appearance.

This figure presents an ablation study to evaluate the impact of three key components on the performance of TeT-Splatting: eikonal loss, normal consistency loss, and tetrahedral grid resolution. Each row demonstrates the effect of altering one component while keeping others constant. It shows that both eikonal and normal consistency losses significantly improve the quality of the generated 3D models, and increasing the grid resolution leads to more detailed results. The images provide visual comparisons, showcasing differences in the quality of the mesh and surface details for each configuration.

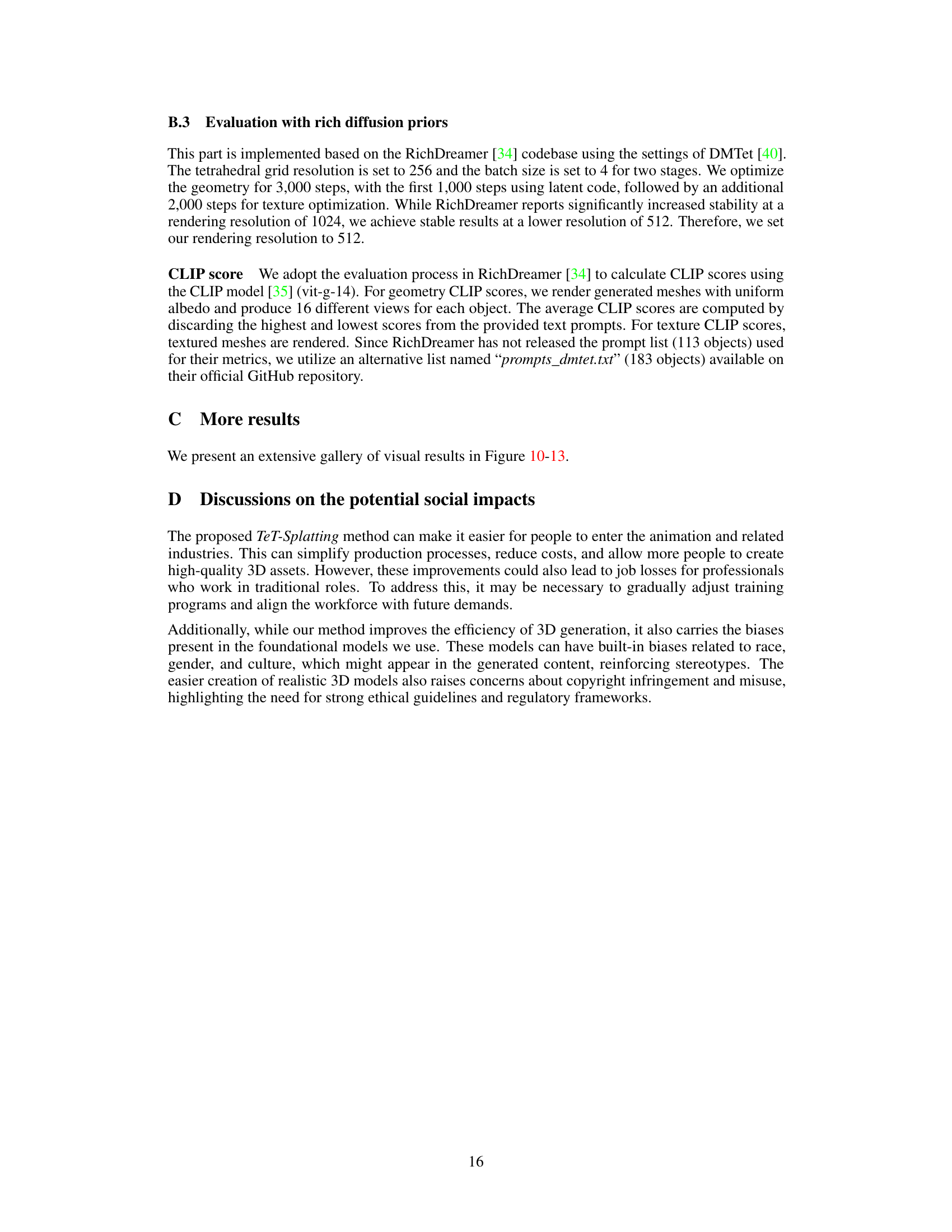

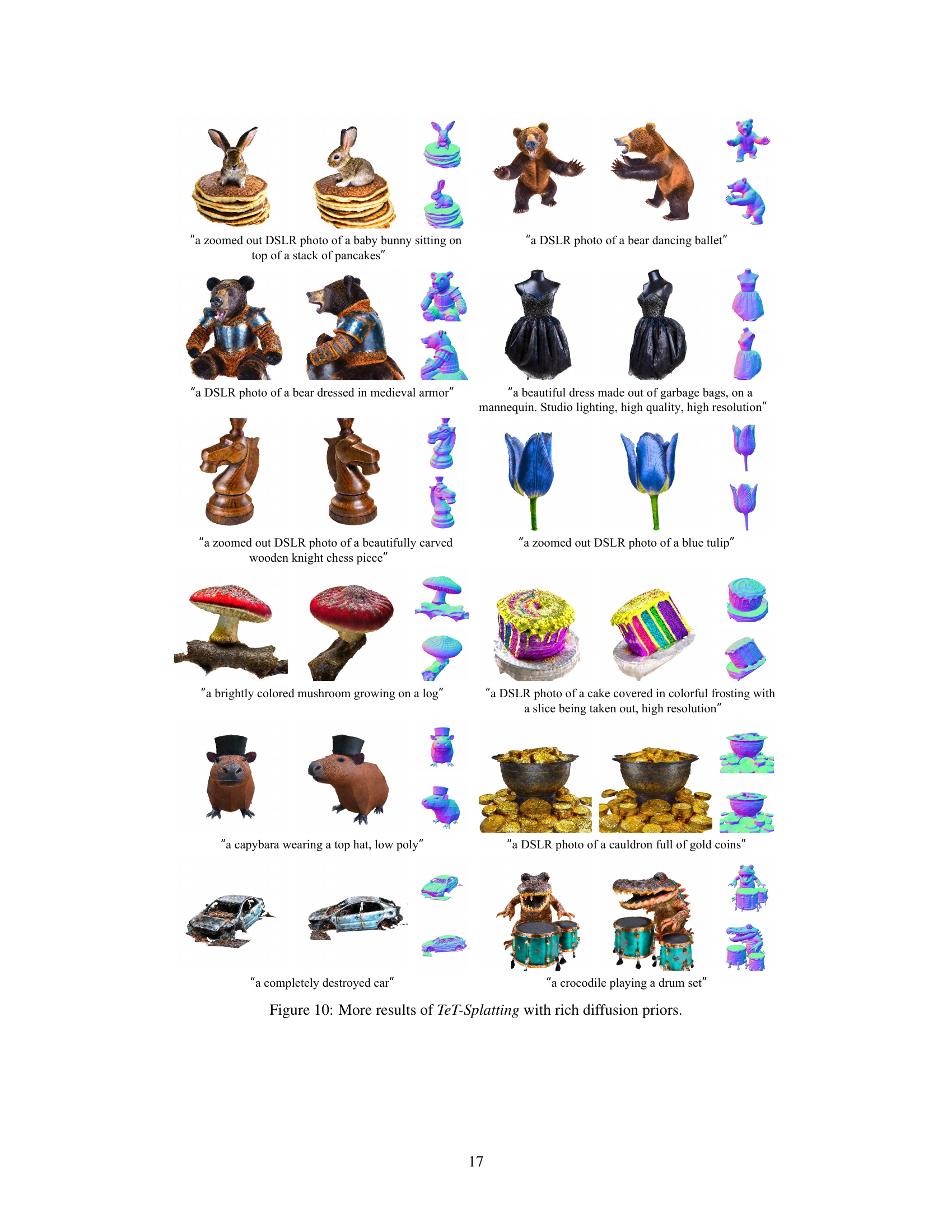

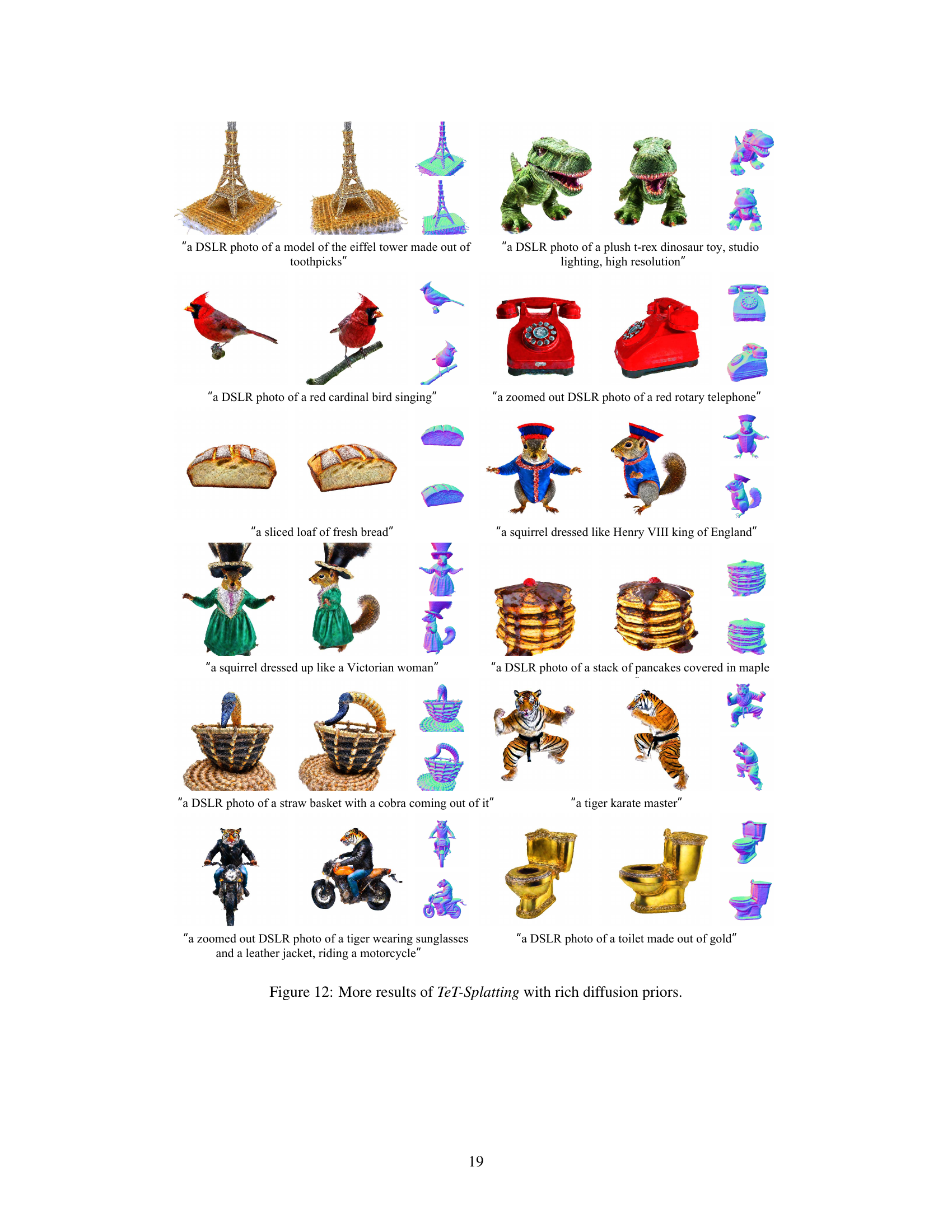

This figure showcases a diverse range of 3D models generated using the TeT-Splatting method. The images demonstrate the technique’s ability to create detailed and realistic 3D assets from various categories, including animals, objects, and scenes. This variety highlights the versatility of the TeT-Splatting approach in generating high-quality 3D content.

This figure compares the normal maps generated during the optimization process of 3D generation using DMTet and TeT-Splatting. It demonstrates that TeT-Splatting provides more stable and smoother optimization compared to DMTet, which tends to get stuck in undesirable shapes. The figure also shows how the normal maps obtained from TeT-Splatting using Marching Tetrahedra (MT) align with the rendering results as the optimization progresses.

This figure compares the normal map evolution during the optimization process for both DMTet and TeT-splatting methods in 3D generation. It demonstrates TeT-splatting’s superior stability and smoothness compared to DMTet, which exhibits fragmentation and gets stuck in suboptimal shapes. The figure also illustrates how TeT-splatting’s normal map aligns with the results obtained through marching tetrahedra (MT) as optimization progresses.

This figure compares the normal maps generated during the optimization process of 3D generation using DMTet and TeT-Splatting. It shows that TeT-Splatting leads to smoother and more stable optimization compared to DMTet, which exhibits fragmentation and gets stuck in unfavorable shapes. The third row shows how TeT-Splatting’s behavior eventually aligns with the results obtained from Marching Tetrahedra (MT) mesh extraction, indicating the consistency and accuracy of its approach.

This figure compares the normal maps generated during the optimization process of 3D generation using DMTet and TeT-Splatting. It shows that TeT-Splatting leads to a smoother and more stable optimization process, unlike DMTet which produces fragmented and undesirable results initially. The third row demonstrates the alignment of TeT-Splatting’s results with Marching Tetrahedra (MT) as the optimization progresses.

This figure showcases a variety of 3D models generated using the TeT-Splatting method presented in the paper. The models depict a wide range of objects, from everyday items (such as food and furniture) to more fantastical creations (like a wedding dress made of tentacles and an erupting volcano). The diversity of the models highlights the versatility and capabilities of the TeT-Splatting technique.

The figure shows a schematic of the TeT-Splatting process, highlighting the pre-filtering step, the projection of tetrahedra into 2D splats, and the alpha-blending process. It also illustrates the two-stage 3D generation pipeline using TeT-Splatting for initial geometry optimization and then switching to polygonal mesh for texture refinement.

This figure showcases a diverse range of 3D models generated using the TeT-Splatting method presented in the paper. The models encompass various objects, demonstrating the versatility of the approach in generating different types of 3D assets. The variety of objects aims to highlight the method’s ability to handle complex geometries and details.

This figure provides a schematic overview of the TeT-Splatting process, showing the pre-filtering step to remove transparent tetrahedra and the projection of remaining tetrahedra into 2D splats for blending. It also illustrates how TeT-Splatting is integrated into a two-stage 3D generation pipeline: first using TeT-Splatting for geometry optimization, then transitioning to polygonal mesh for texture refinement.

This figure shows a schematic overview of the TeT-Splatting method (left) and its integration into a 3D generation pipeline (right). The left panel illustrates how the method works: it starts with pre-filtering tetrahedra, projects them into 2D splats, and blends these splats based on opacity values derived from the signed distance field (SDF). The right panel shows how TeT-Splatting is integrated into a two-stage 3D generation pipeline. First, geometry optimization is performed using TeT-Splatting. Then, this representation is transitioned to a polygonal mesh to perform texture refinement, leading to final 3D generation.

Full paper#