↗ OpenReview ↗ NeurIPS Proc. ↗ Hugging Face ↗ Chat

TL;DR#

Creating realistic sounds in virtual environments is challenging because it requires accurately modeling how sound waves travel and interact with objects. Existing methods for synthesizing sounds often struggle to capture fine details or generalize well to new situations. They often lack physical constraints that should govern sound wave propagation. This has hindered the development of truly immersive audio experiences.

The paper introduces Acoustic Volume Rendering (AVR) to solve these issues. AVR uses a novel approach that models sound waves in the frequency domain, making it more efficient and accurate. The frequency-domain method also incorporates spherical integration to better capture the spatial characteristics of sound. To make the method more accurate, a new physical simulator called AcoustiX is also introduced, which produces more realistic simulation data. The results show that AVR surpasses existing methods in synthesizing impulse responses and provides highly accurate, physics-based rendering.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in spatial audio and computer graphics because it bridges the gap between realistic acoustic modeling and efficient neural rendering. By introducing a novel frequency-domain volume rendering technique and a physics-based simulator, the research opens doors for creating more immersive and believable virtual and augmented reality experiences. Furthermore, the open-sourced code and simulator will greatly benefit the community. This work also paves the way for integrating physical wave propagation principles into other neural field applications, impacting numerous fields.

Visual Insights#

The figure shows the system’s pipeline. The left part shows the top-down view of a room with a speaker and two microphones, indicating impulse responses are captured. The right part visualizes the spatial variation of impulse responses. The synthesized impulse responses at different listener locations are transformed into the frequency domain, showing phase and amplitude distributions.

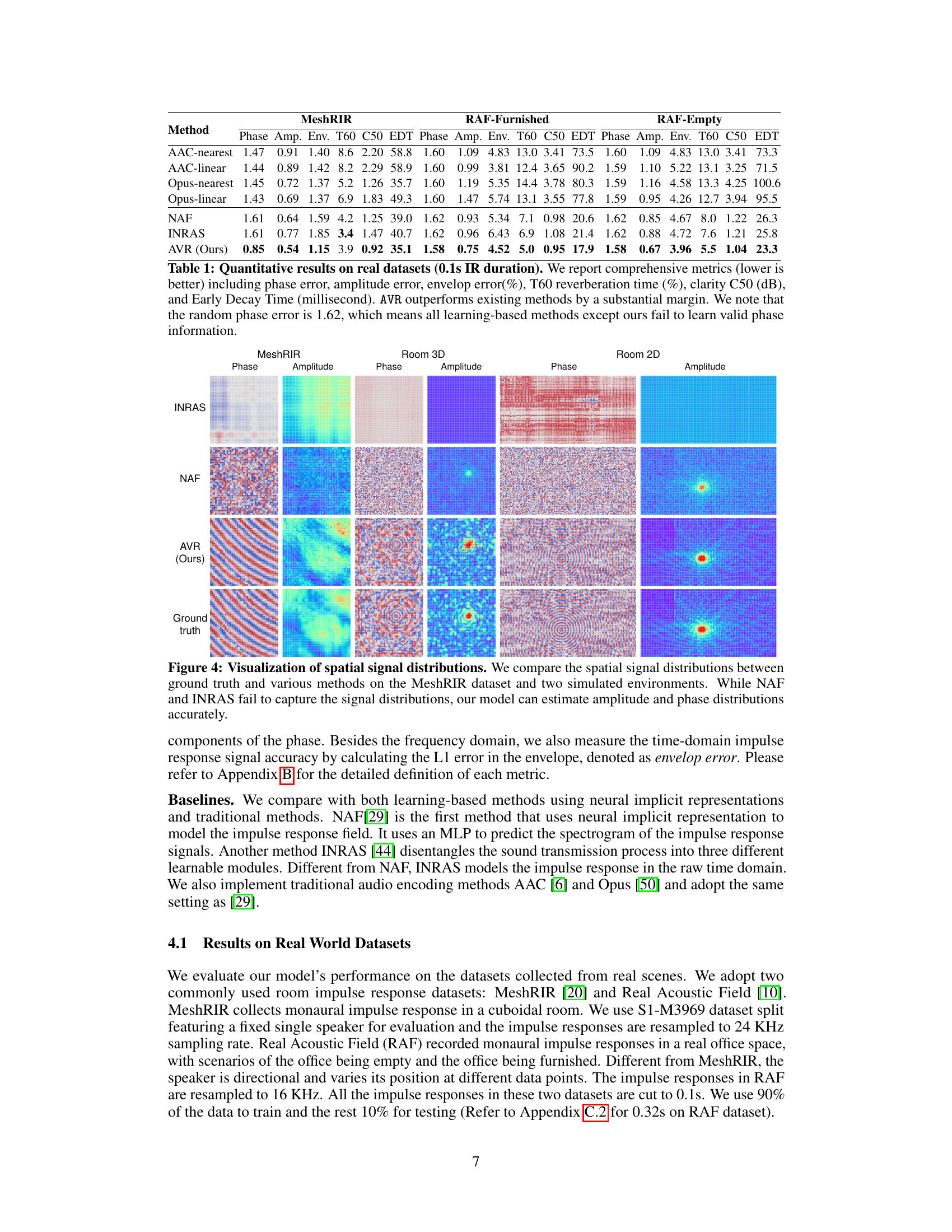

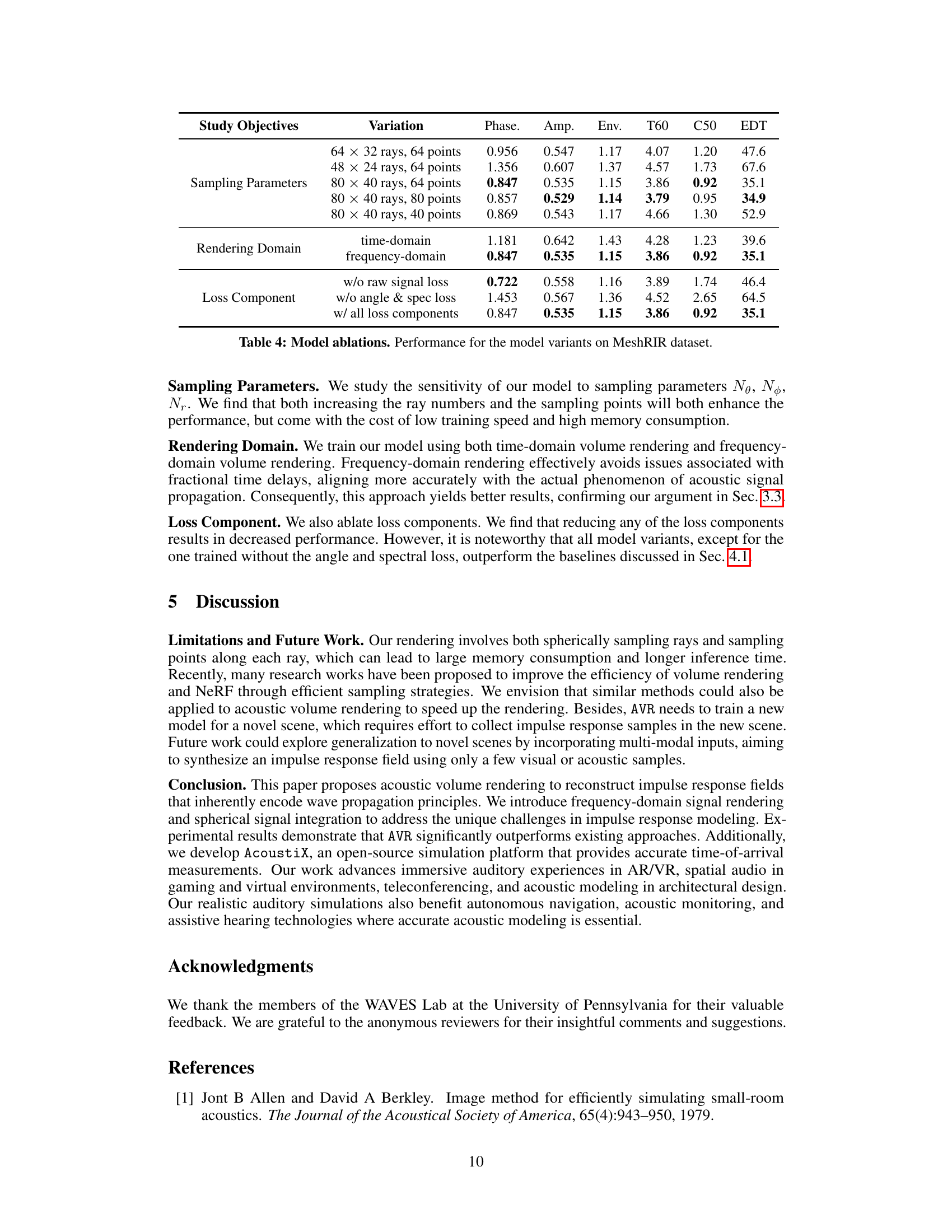

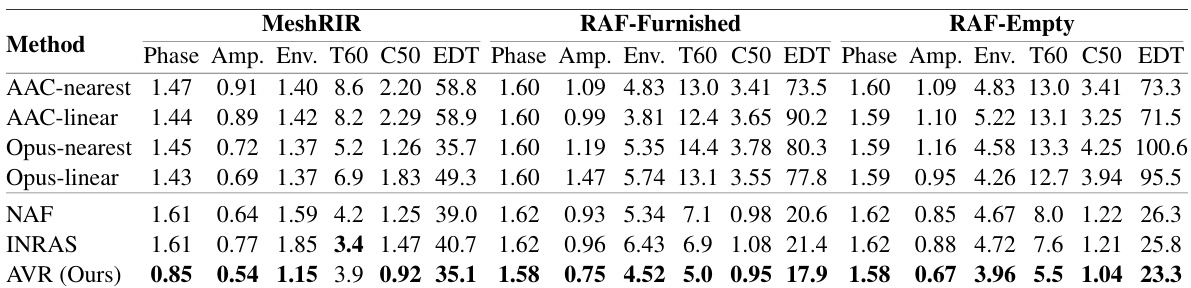

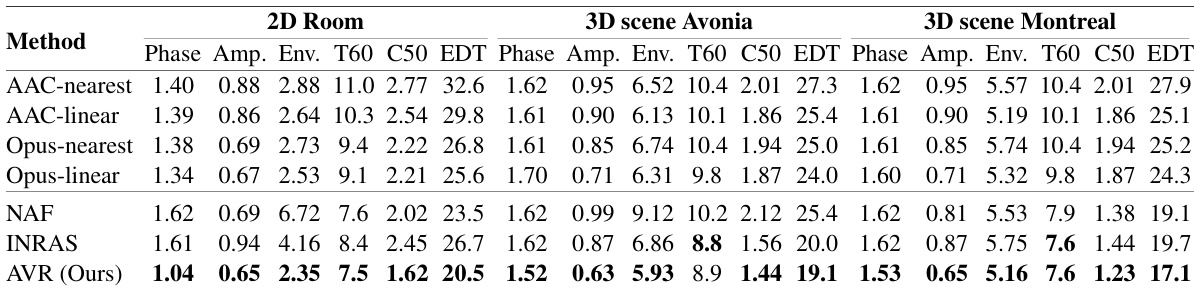

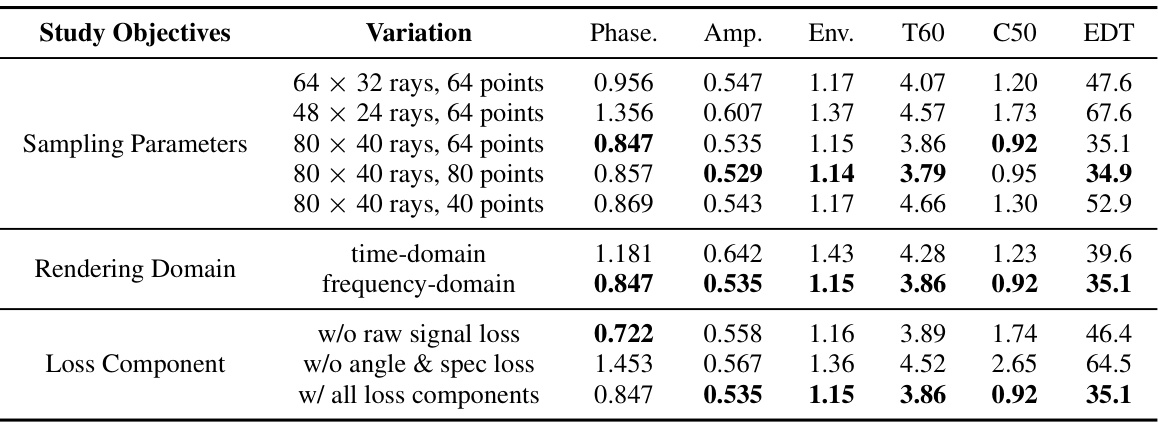

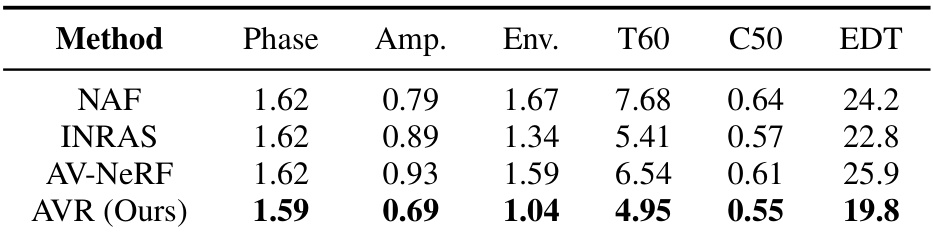

This table presents a quantitative comparison of different methods for synthesizing impulse responses on real-world datasets. The methods are evaluated using various metrics, including phase error, amplitude error, envelope error, reverberation time (T60), clarity (C50), and early decay time (EDT). The results show that the proposed Acoustic Volume Rendering (AVR) method significantly outperforms existing methods, particularly in terms of phase accuracy, indicating its ability to capture the detailed characteristics of impulse responses.

In-depth insights#

Acoustic Vol. Rendering#

Acoustic volume rendering, as a novel concept, merges the established technique of volume rendering with the intricacies of acoustic wave propagation. This innovative approach directly addresses the challenge of synthesizing realistic audio in virtual environments by modeling the acoustic impulse response (IR) as a 3D field. Key advantages include the inherent encoding of wave principles like reflection and diffraction, leading to more accurate and physically consistent simulations compared to previous methods. This is achieved by performing rendering in the frequency domain, incorporating phase shifts to account for wave delays, and utilizing spherical integration to capture signals from various directions. The frequency-domain approach proves particularly useful in handling real-world, discretely-sampled IR data by transforming time delays into phase shifts, which avoids the complexities of time-domain interpolation. Further enhancing realism, the technique can incorporate individual head-related transfer functions for personalized binaural audio synthesis. Overall, acoustic volume rendering presents a significant advancement in spatial audio synthesis with its superior accuracy and potential for creating truly immersive auditory experiences.

Freq-Domain AVR#

The proposed Frequency-domain Acoustic Volume Rendering (AVR) offers a compelling solution to the challenges of traditional time-domain methods in acoustic modeling. By shifting the rendering process to the frequency domain, AVR elegantly addresses the problem of fractional time delays inherent in real-world acoustic wave propagation. This is crucial because discrete sampling in the time domain struggles to accurately capture signals that arrive between sampling intervals. The frequency-domain representation neatly circumvents this issue by transforming the time delays into phase shifts that can be efficiently handled. This is especially beneficial when dealing with the complex wave interactions encountered in reverberant environments. Furthermore, the frequency-domain approach often results in smoother spatial variations in signal characteristics, thus simplifying network optimization and improving generalization performance. This innovative approach demonstrates a significant advance in realistic audio synthesis by directly incorporating the underlying physics of wave propagation within its framework. The use of spherical integration further enhances the accuracy by consolidating signals from all directions, making it ideal for the realistic rendering of binaural audio.

AcoustiX Simulator#

The AcoustiX simulator, as described in the paper, is a significant contribution addressing limitations in existing acoustic simulation platforms. AcoustiX improves accuracy by incorporating physics-based acoustic propagation equations, resolving issues like inaccurate time-of-flight calculations and phase errors prevalent in other simulators. This enhanced realism is crucial for training neural impulse response (IR) models, as inaccuracies in simulation data can lead to poor generalization and reduced performance in real-world applications. The use of the Sionna ray tracing engine provides a robust foundation for efficient simulation. The platform’s compatibility with Blender allows for user-friendly creation and integration of 3D models, enhancing its usability and accessibility. The ability to simulate various wave interactions (reflection, scattering, diffraction) further increases its versatility. Providing more accurate impulse responses directly benefits the training of models aiming to synthesize realistic spatial audio, leading to significant improvements in the fidelity and accuracy of spatial audio synthesis.

Real-World Results#

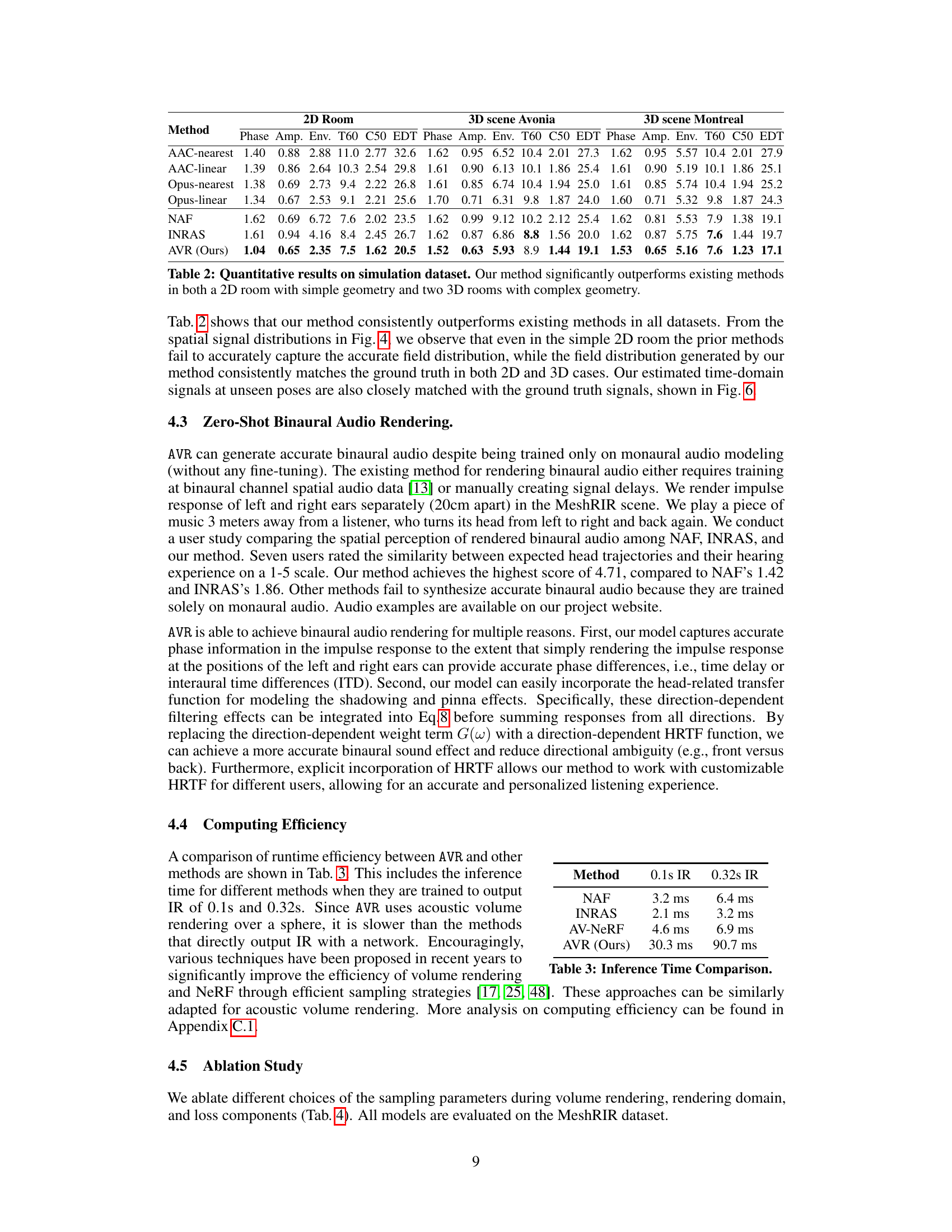

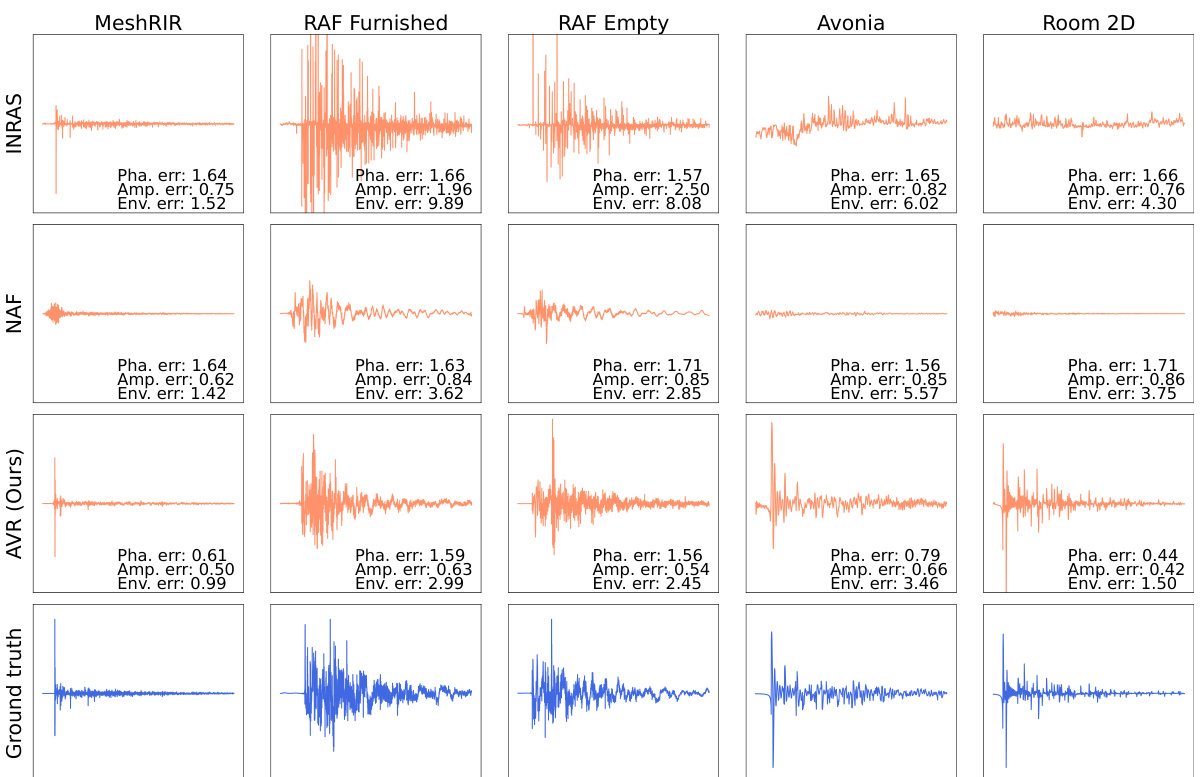

In evaluating the efficacy of a novel method for acoustic impulse response synthesis, a dedicated section on ‘Real-World Results’ would be crucial. It should present a rigorous comparison against existing state-of-the-art techniques using real-world datasets. The section should detail the metrics employed for evaluation, such as signal-to-noise ratio, perceptual metrics like clarity, and spatial accuracy measurements. Key to the credibility of the results is a thorough explanation of the datasets used, including their diversity and limitations. It’s important to showcase the model’s performance in diverse acoustic environments, which would demonstrate its robustness. Visualizations are also needed, perhaps using spectrograms or impulse response waveforms, comparing generated outputs to ground truth recordings from different viewpoints in the real world. This section should also address any challenges encountered in applying the method to real-world data, and provide potential explanations for discrepancies. The focus must be on objectively demonstrating the method’s ability to generalize to unseen real-world scenarios, thus establishing its practical value.

Future Research#

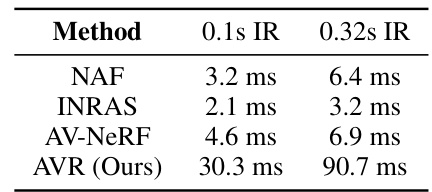

Future research directions stemming from this work on acoustic volume rendering could explore several avenues. Improving efficiency is crucial; current methods, while accurate, are computationally expensive. Investigating more efficient neural architectures or sampling strategies would be beneficial. Generalization to unseen scenes is another important goal; current models require training data for each specific environment. Techniques allowing for zero-shot or few-shot learning, potentially incorporating multi-modal data (visual cues), would significantly improve applicability. Furthermore, incorporating more sophisticated physical models of sound propagation (e.g., diffraction and scattering effects) could lead to even higher fidelity audio synthesis. Finally, exploring applications beyond virtual and augmented reality are promising; examples include advancements in architectural acoustics and audio-visual scene reconstruction.

More visual insights#

More on figures

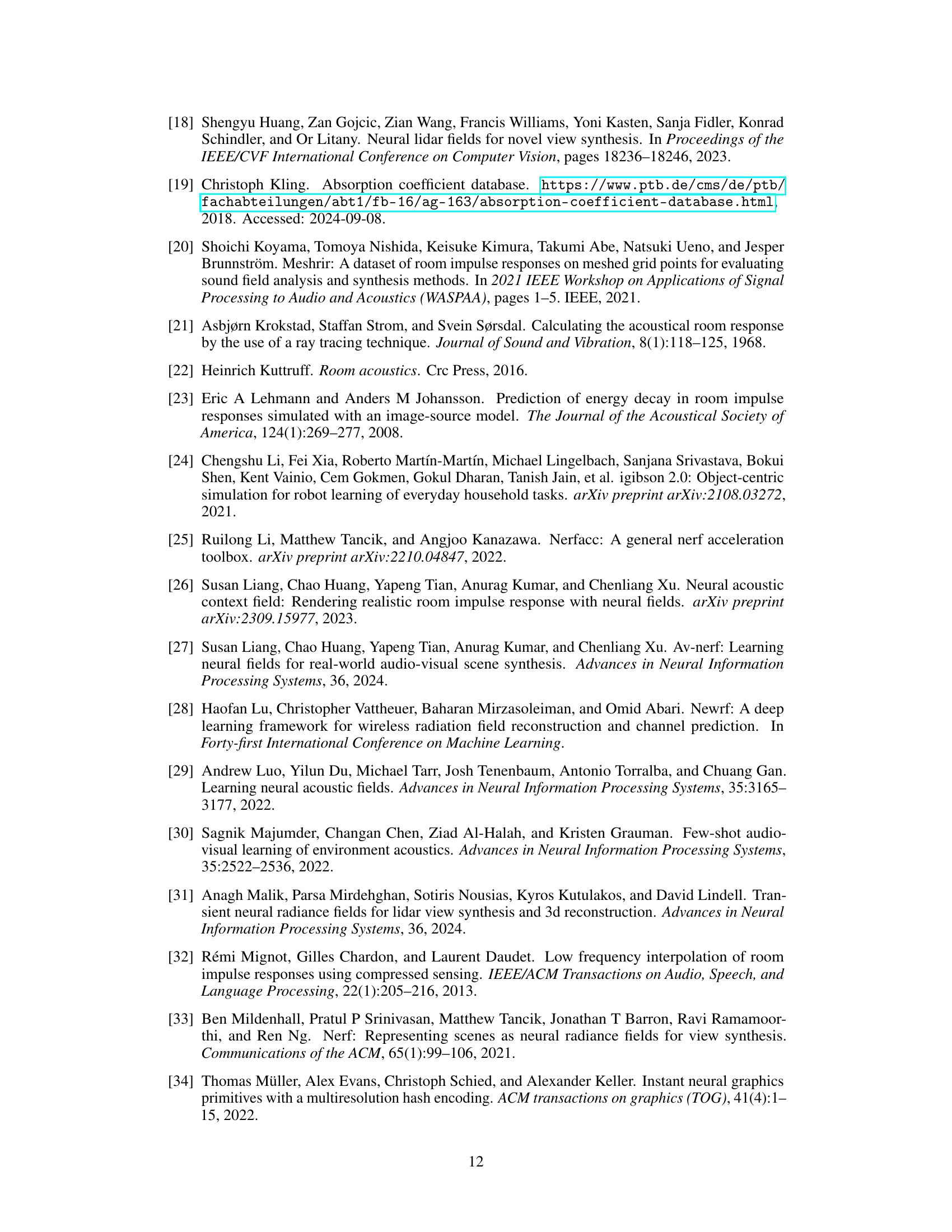

This figure compares the accuracy of time-of-flight estimations between SoundSpaces 2.0 and the proposed AcoustiX simulator. It plots simulated time-of-flight against the ground truth time-of-flight for varying emitter-listener distances. The results show that SoundSpaces 2.0 has significant errors, especially at shorter distances, whereas AcoustiX provides far more accurate estimations, demonstrating its improved simulation capabilities.

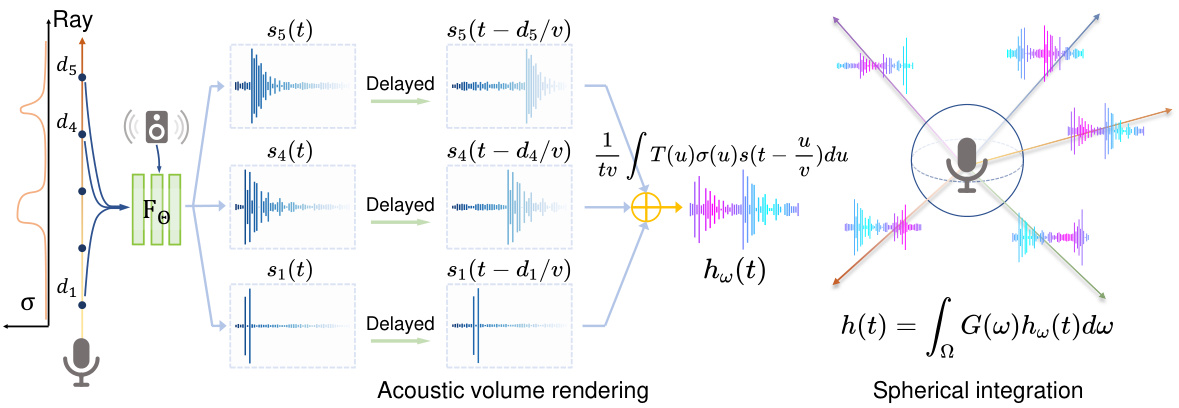

This figure illustrates the pipeline of the proposed Acoustic Volume Rendering (AVR) method. It shows how the method works step by step, starting from sampling points along a ray from the microphone to obtaining the final rendered impulse response. The key steps include querying a neural network to get signals and density, applying time delay to account for wave propagation, performing acoustic volume rendering for each ray, and integrating signals from all directions using a gain pattern to get the final impulse response. The figure provides a clear visualization of the entire process, making it easier to understand the core idea of the AVR method.

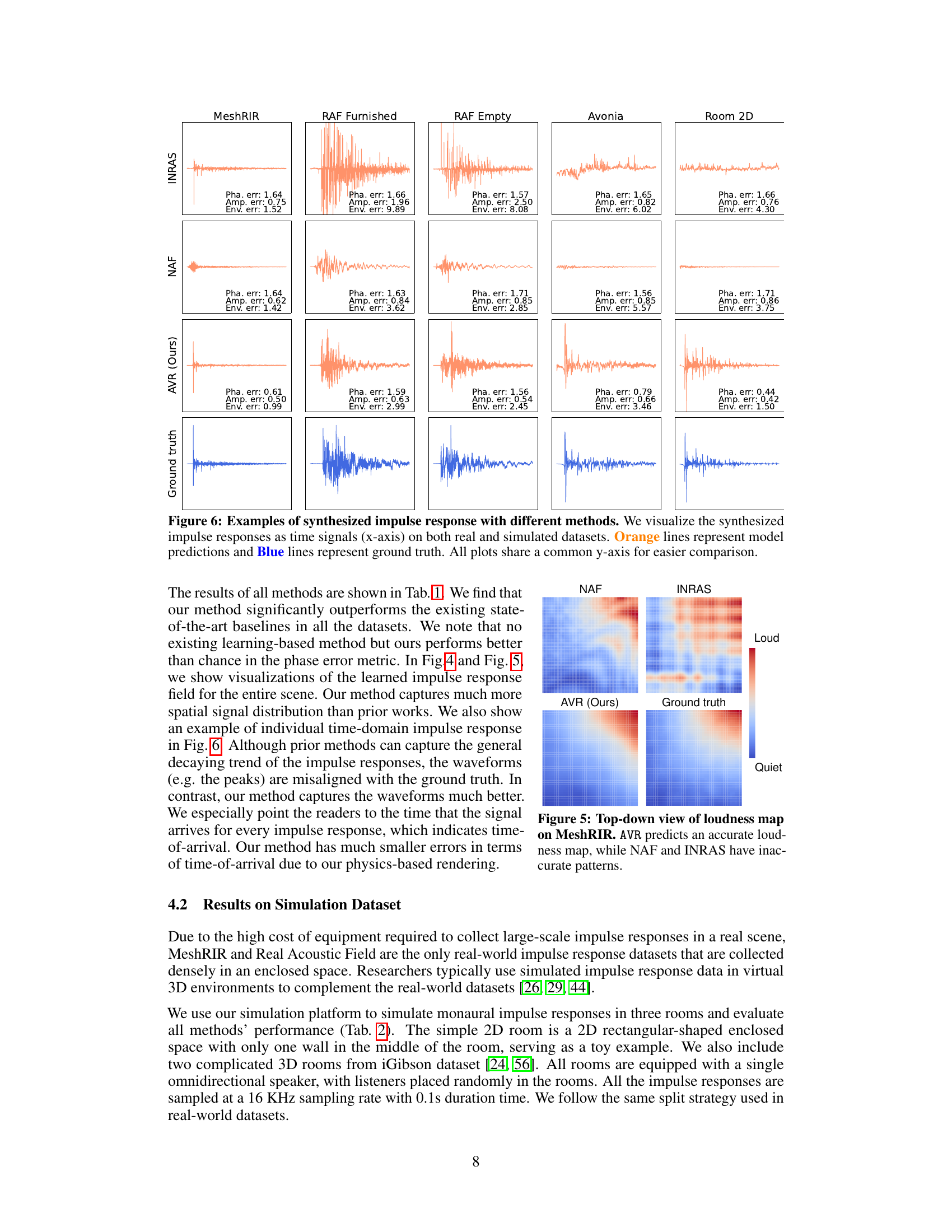

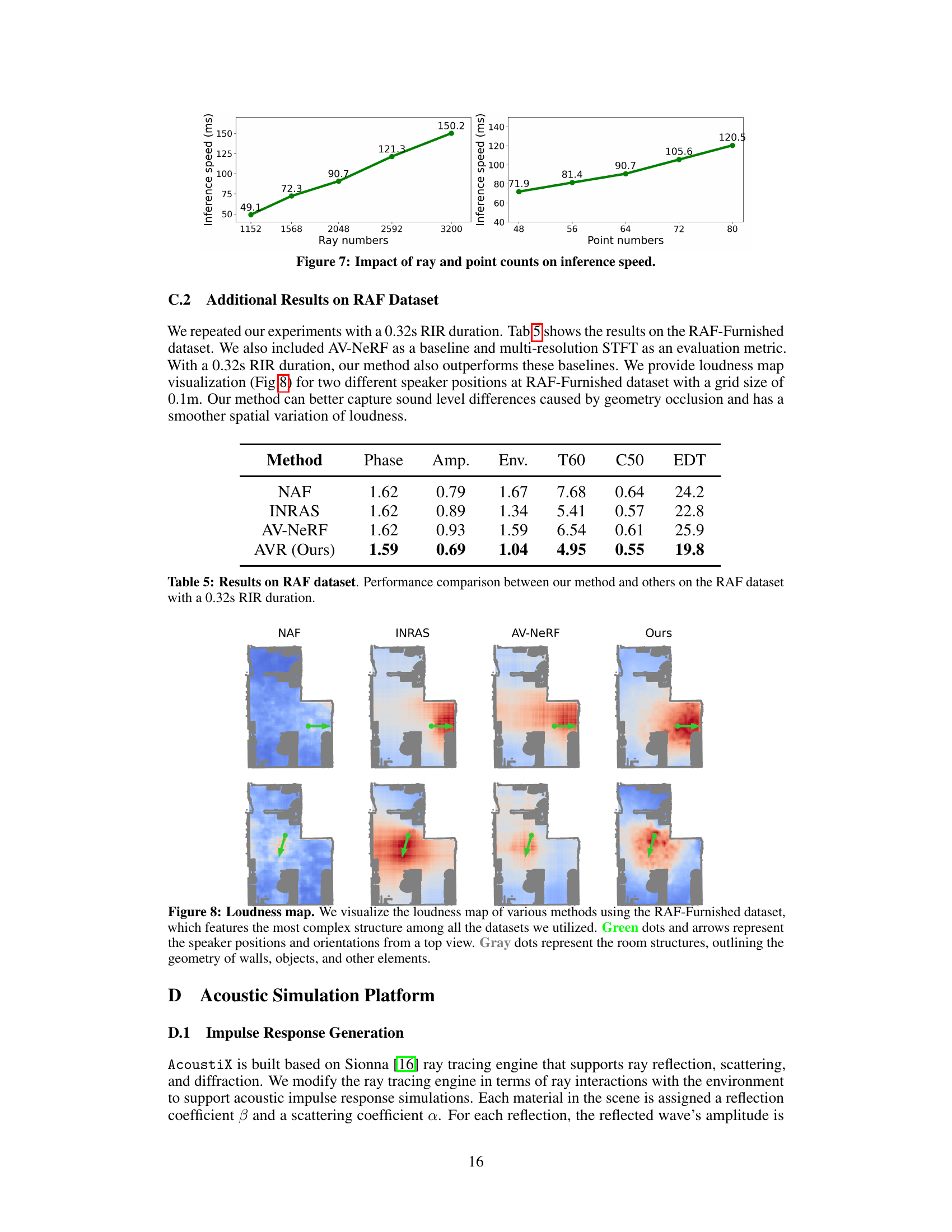

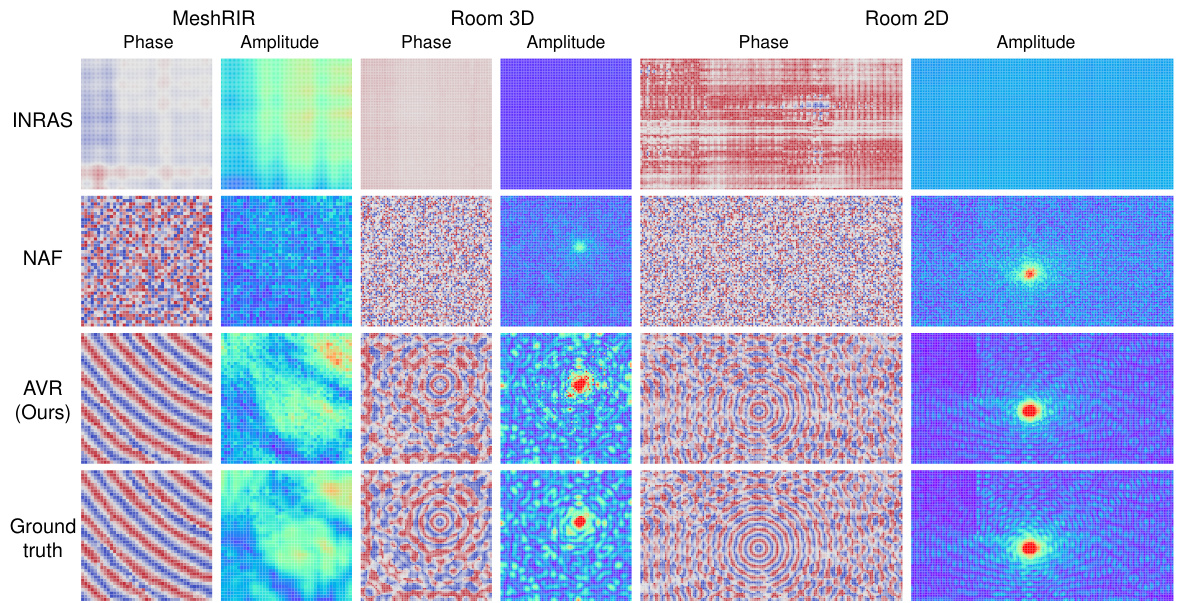

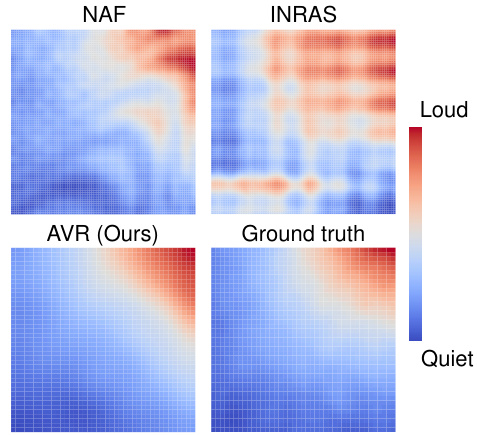

This figure compares the spatial distribution of signals (both amplitude and phase) generated by different methods against the ground truth. The comparison is done across three different datasets: MeshRIR and two simulated environments. The visualization shows that the proposed method (AVR) accurately captures the signal distribution, unlike existing methods (NAF and INRAS) that fail to capture the detailed characteristics.

The figure shows the model’s ability to synthesize impulse responses at novel listener positions based on observations from a speaker. The left panel depicts the general process, where observations from different positions are used to construct an impulse response field. The right panel shows a visualization of the spatial variation of impulse responses, which is visualized in the frequency domain to highlight phase and amplitude at specific wavelengths. The visualization helps demonstrate how the proposed method can accurately capture spatial variation of impulse responses.

This figure compares the spatial distribution of signals from different methods (NAF, INRAS, AVR, and Ground Truth) on the MeshRIR dataset and two simulated environments. It visualizes the amplitude and phase of the impulse responses across different spatial locations. The comparison highlights that AVR accurately captures the detailed signal characteristics, unlike NAF and INRAS which struggle to represent the spatial signal variations properly. This demonstrates the superior performance of AVR in modeling the complex spatial variations of impulse responses.

This figure illustrates the Acoustic Volume Rendering pipeline proposed by the authors. It depicts the process of generating acoustic impulse responses by sampling points along rays, applying time delays for wave propagation, performing volume rendering, and integrating signals from all directions using spherical integration.

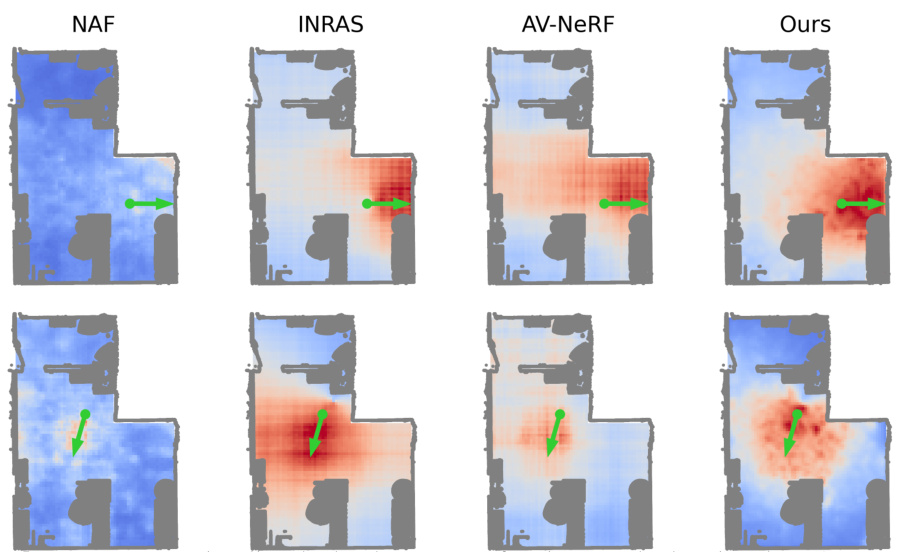

This figure compares the spatial distribution of acoustic signals generated by different methods (NAF, INRAS, AV-NeRF, and the proposed method) with ground truth on MeshRIR and simulated environments. It visualizes both amplitude and phase distributions in the frequency domain, highlighting the superior accuracy and detail of the proposed method in capturing the complex spatial variations of acoustic signals.

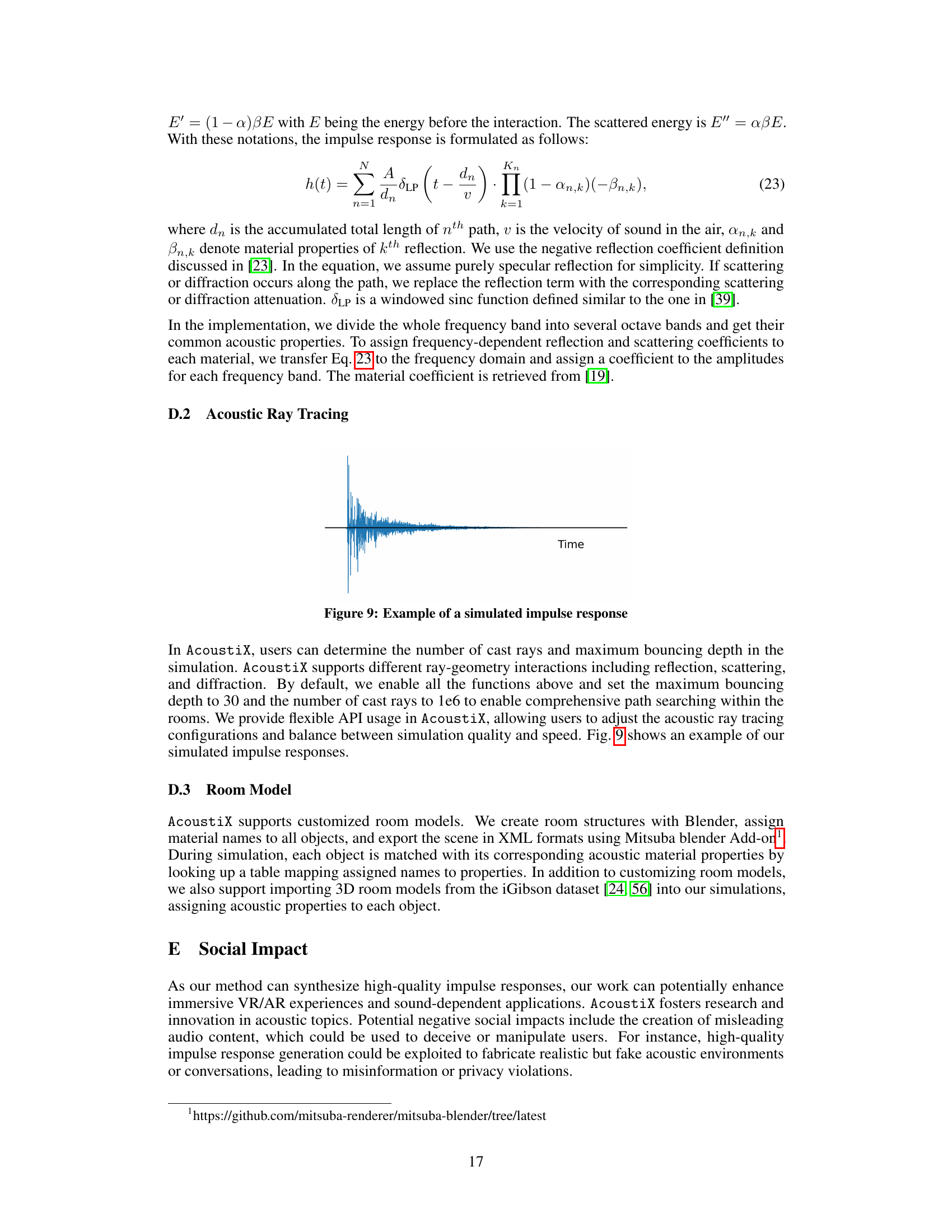

This figure shows an example of a simulated impulse response generated by AcoustiX, the acoustic simulation platform developed by the authors. The waveform depicts the time-varying signal received at a listener’s position, showcasing the complex interactions of sound with the environment (reflections, scattering, diffraction). The initial, strong peak represents the direct sound arrival from the source, while the subsequent oscillations and decay illustrate the effects of environmental reflections and reverberation. The figure highlights the ability of AcoustiX to generate realistic and detailed impulse responses.

More on tables

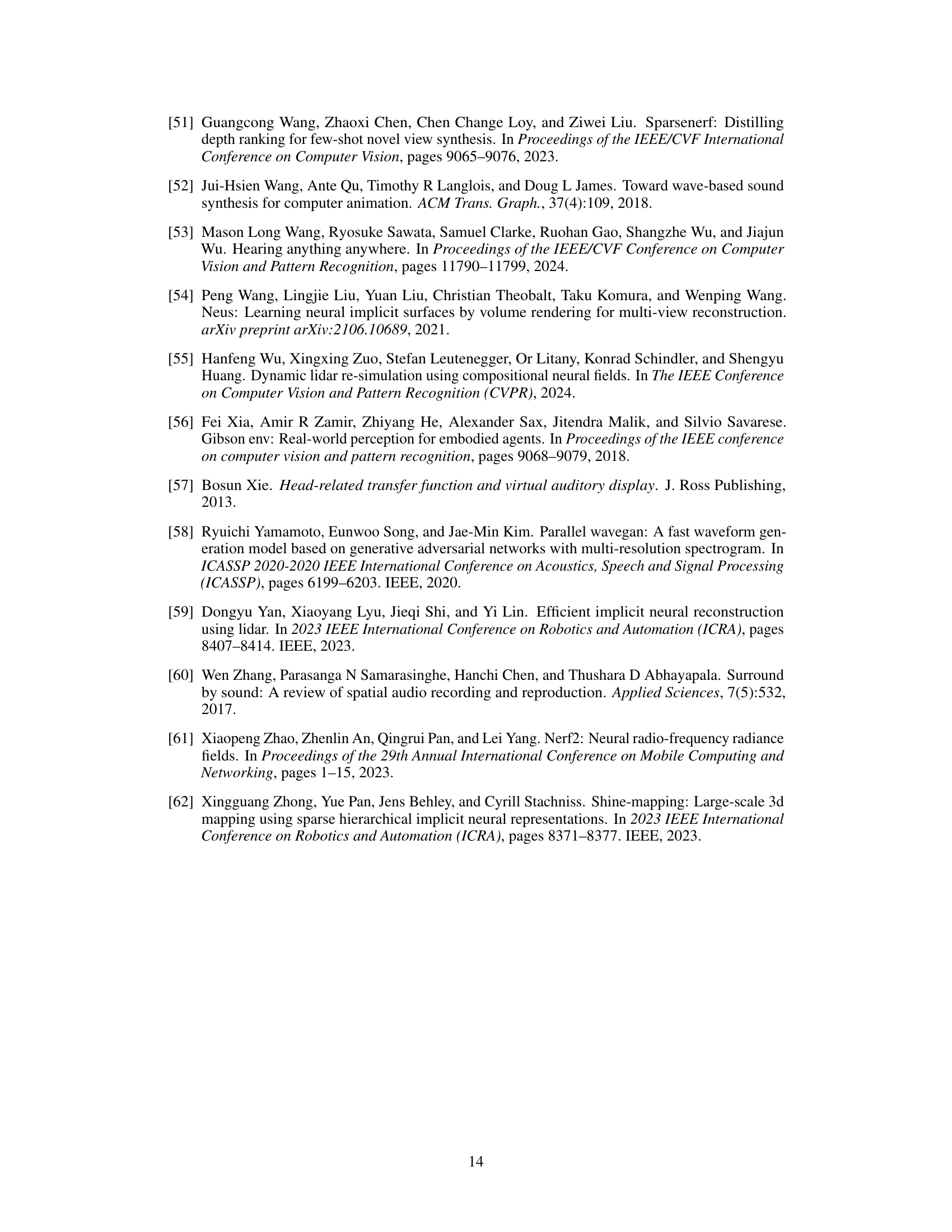

This table presents a quantitative comparison of the proposed Acoustic Volume Rendering (AVR) method against several baseline methods on real-world datasets. The evaluation metrics assess various aspects of impulse response synthesis accuracy, including phase and amplitude errors, envelope error, reverberation time, clarity, and early decay time. The results demonstrate AVR’s significant performance advantage over existing techniques, particularly in accurately capturing the phase information of the impulse responses.

This table presents a quantitative comparison of different methods for synthesizing impulse responses on real-world datasets. The methods are evaluated using several metrics, including phase error, amplitude error, envelope error, reverberation time (T60), clarity (C50), and early decay time (EDT). The results show that the proposed Acoustic Volume Rendering (AVR) method significantly outperforms existing methods across all metrics. The exceptionally high phase error of other methods indicates their failure to accurately model phase information in the synthesized impulse responses.

This table presents a quantitative comparison of different methods for synthesizing impulse responses on real-world datasets. The methods are evaluated using several metrics, including phase and amplitude errors, envelope error, reverberation time (T60), clarity (C50), and early decay time (EDT). The results show that the proposed Acoustic Volume Rendering (AVR) method significantly outperforms existing state-of-the-art methods, particularly in terms of phase accuracy. The high random phase error (1.62) for other methods indicates a failure to learn valid phase information, highlighting the unique capability of AVR.

This table presents a quantitative comparison of different methods for synthesizing impulse responses on real-world datasets. The methods are evaluated using several metrics, including phase error, amplitude error, envelope error, reverberation time (T60), clarity (C50), and early decay time (EDT). Lower values indicate better performance. The results demonstrate that Acoustic Volume Rendering (AVR) significantly outperforms existing state-of-the-art methods, particularly in terms of phase accuracy.

Full paper#