↗ OpenReview ↗ NeurIPS Proc. ↗ Chat

TL;DR#

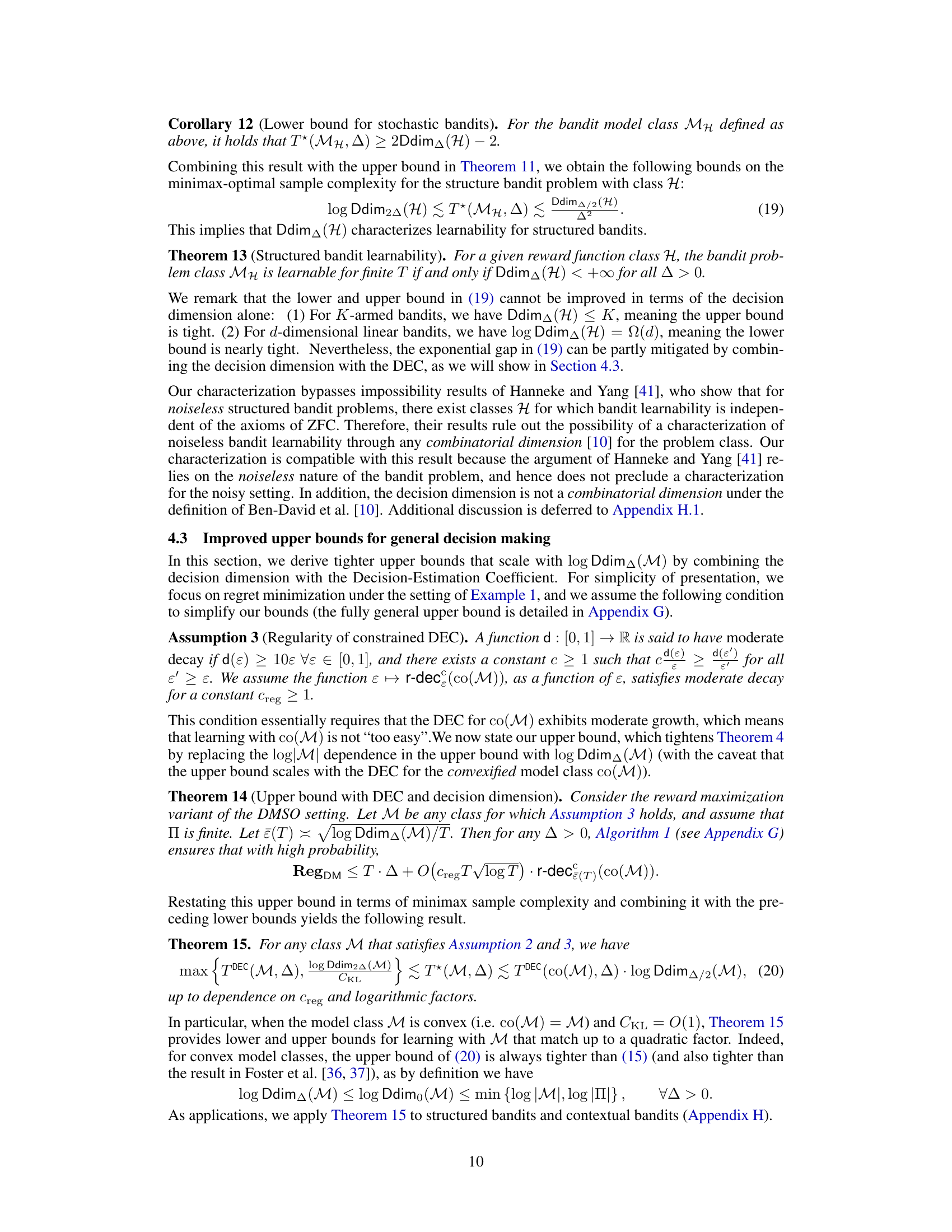

Classical lower bound techniques, while useful for passive statistical estimation, fall short for interactive decision-making algorithms like those used in bandit problems. The difficulty arises from the algorithm’s active role in data collection, making it hard to quantify information gain in a way that yields tight bounds. Previous work had addressed this, but with techniques that didn’t recover classical results or left gaps between upper and lower bounds.

This work introduces a unified information-theoretic lower bound framework, the interactive Fano method, that encompasses both classical methods (Assouad, Fano, and Le Cam) and interactive settings. A new complexity measure, the Decision Dimension, is presented. This measure facilitates tighter lower bounds and a complete characterization of learnability in structured bandits, closing the gap from previous work (up to polynomial factors) for convex model classes.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in bandit algorithms and interactive decision-making. It unifies existing lower bound frameworks, offering a novel approach that is both simpler and more broadly applicable. The introduction of the Decision Dimension provides a new tool to analyze structured bandit problems, directly impacting the design and analysis of learning algorithms. Further research could explore applications to diverse interactive learning scenarios and refining the gap between upper and lower bounds.

Visual Insights#

This figure presents the interactive Fano method which extends Fano’s inequality to interactive decision-making problems. It recovers classical lower bounds (Assouad, Fano, Le Cam) as special cases and integrates them with DEC-based lower bounds for interactive decision-making. The core idea is to generalize the separation condition in Fano’s inequality to an algorithm-dependent condition, leveraging a ‘ghost’ data generation from a reference distribution to quantify the separation between model distributions. The key result is a unified framework for characterizing learnability for any structured bandit problem using a new complexity measure: the decision dimension.

Full paper#